Artificial intelligence models have grown in size and complexity, offering outstanding results in everything from language translation to image recognition. However, their heavy computing needs make them difficult to run on everyday devices. In response, the AI community has developed a solution: distilled models. These are smaller, more efficient versions of large models that maintain most of the original accuracy while being faster and lighter.

Distilled models are becoming increasingly popular in production environments, especially when there is a need to balance performance with speed and hardware limitations. This post explores what distilled models are, how they work, and why they are a practical breakthrough in the world of machine learning.

What Are Distilled Models?

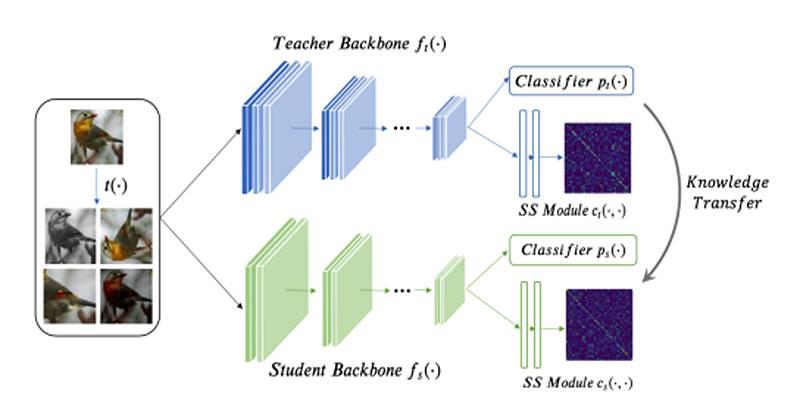

Distilled models, often developed through a process known as knowledge distillation, are compact neural networks trained to replicate the performance of larger, more complex models. The larger models, often referred to as teacher models, are typically state-of-the-art in accuracy but are also slow and resource-hungry.

To get around this problem, a smaller student model is trained to imitate the teacher. Even though the student has far fewer parameters, it learns to produce predictions that closely match the teacher's. The result is a lightweight model that works well in real-time situations and on devices with limited resources.

Why Are They Called “Distilled”?

The word "distilled" comes from the chemistry term "distillation," the process of extracting and concentrating the essence of a substance. Knowledge distillation works the same way: it takes the knowledge and decision-making ability of a large model and concentrates it into a smaller one.

Rather than learning from raw data alone, the student model also learns from the outputs of the teacher model. These outputs, known as soft targets, contain richer information than traditional labels, allowing the student model to generalize better even with fewer parameters.
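To make "richer information" concrete, here is a minimal Python sketch. It assumes the temperature-scaled softmax commonly used for distillation, where the teacher's raw scores (logits) are divided by a temperature before being turned into probabilities; the text above does not specify how soft targets are computed, and the logits and the three class names are made-up values for illustration.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores (logits) into probabilities, softened by `temperature`."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical teacher logits for one image over the classes [cat, dog, car].
teacher_logits = [5.0, 3.0, -2.0]

hard_label = [1.0, 0.0, 0.0]              # the dataset only says "cat"
soft_t1 = softmax(teacher_logits, 1.0)    # teacher's ordinary prediction
soft_t4 = softmax(teacher_logits, 4.0)    # softened soft targets (T = 4)

for name, probs in [("hard", hard_label), ("T=1", soft_t1), ("T=4", soft_t4)]:
    print(name, [round(p, 3) for p in probs])
```

The hard label says nothing about dogs or cars, while the teacher's output gives "dog" far more probability than "car", telling the student that this cat looks more like a dog than a car. Raising the temperature spreads probability across the classes without changing their order, which makes that similarity signal easier for the student to learn from.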

How Does Knowledge Distillation Work?

Knowledge distillation typically involves three key stages:

- Training the Teacher Model: A large, accurate neural network is trained on a labeled dataset. This model serves as the reference point for the next steps.

- Generating Soft Targets: Instead of giving only the single correct answer, the teacher predicts a probability for every possible class. These probabilities, or “soft labels,” show how confident the teacher is and which wrong classes it still considers plausible.

- Training the Student Model: A smaller model is trained on both the original dataset and the teacher’s soft targets. The goal is to mimic the teacher’s behavior as closely as possible while using fewer parameters and layers.
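The third stage needs a training objective that combines both signals. Below is a minimal pure-Python sketch of one common choice, assumed here rather than taken from the text: a weighted sum of ordinary cross-entropy on the true label and the KL divergence between the temperature-softened teacher and student outputs. The weight `alpha`, the temperature, the learning rate, and the toy logits are illustrative assumptions. For brevity the student's logits are optimized directly; a real student network would backpropagate the same gradient into its weights.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn logits into probabilities, softened by `temperature`."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """alpha * hard-label cross-entropy + (1 - alpha) * T^2 * KL(teacher || student)."""
    # Hard-label term: ordinary cross-entropy against the dataset label (T = 1).
    hard = -math.log(softmax(student_logits)[label])
    # Soft-target term: compare teacher and student, both softened by the same T.
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    soft = sum(pt * math.log(pt / ps) for pt, ps in zip(p_teacher, p_student) if pt > 0)
    # Scaling by T^2 keeps the soft term's gradients comparable as T changes.
    return alpha * hard + (1 - alpha) * temperature ** 2 * soft


def distillation_grad(student_logits, teacher_logits, label, temperature=4.0, alpha=0.5):
    """Gradient of distillation_loss with respect to the student's logits."""
    p_hard = softmax(student_logits)
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    grad = []
    for i in range(len(student_logits)):
        hard_g = p_hard[i] - (1.0 if i == label else 0.0)
        soft_g = temperature * (p_student[i] - p_teacher[i])
        grad.append(alpha * hard_g + (1 - alpha) * soft_g)
    return grad


# Toy run: logits from a hypothetical frozen teacher; the true class is 0.
teacher_logits = [5.0, 3.0, -2.0]
student_logits = [0.0, 0.0, 0.0]
label = 0

start = distillation_loss(student_logits, teacher_logits, label)
for _ in range(200):
    g = distillation_grad(student_logits, teacher_logits, label)
    student_logits = [z - 0.5 * gi for z, gi in zip(student_logits, g)]
end = distillation_loss(student_logits, teacher_logits, label)

print(f"loss: {start:.3f} -> {end:.3f}")
print("student soft outputs:", [round(p, 3) for p in softmax(student_logits, 4.0)])
```

In practice the teacher stays frozen, its outputs are computed for each training batch (or cached ahead of time), and `alpha` and the temperature are tuned like any other hyperparameters.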

Examples of Distilled Models

Many companies and research teams have built distilled versions of popular models.

Here are a few well-known examples:

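- DistilBERT: A smaller version of BERT for natural language tasks. Its authors report that it is about 40% smaller and 60% faster than BERT while keeping about 97% of its language-understanding performance.

- TinyBERT: Another compact, distilled BERT model, small enough for mobile and other resource-constrained deployments.

- MobileNet: A lightweight image-recognition architecture for smartphones. Strictly speaking, it is an efficient design rather than a distilled model, but it is a common choice of student model in distillation.

- DistilGPT-2: A distilled version of GPT-2 used for faster text generation.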

These models show that it’s possible to reduce size and cost without losing much performance.

Advantages of Distilled Models

Distilled models offer a range of benefits that make them ideal for modern AI applications, especially in environments where resources are limited.

Key advantages include:

- Reduced Model Size: Distilled models take up significantly less space, which is ideal for mobile and embedded systems.

- Faster Inference Time: These models deliver predictions quickly, enabling real-time applications like voice recognition and video analysis.

- Lower Resource Consumption: They require less memory, power, and processing capacity, which makes them suitable for edge computing.

- Deployment Flexibility: Since they can run on a wider range of devices, they’re more practical for industries that need scalable AI solutions.

- Comparable Accuracy: Although smaller, well-distilled models often retain much of the original model's accuracy.
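A quick back-of-the-envelope calculation shows what reduced model size means in memory terms. The sketch below uses the approximate parameter counts reported by the DistilBERT authors (about 110 million for BERT-base and 66 million for DistilBERT) and assumes 4 bytes per weight in float32. It counts only the stored weights, not activations or runtime overhead.

```python
# Approximate parameter counts reported for the two public models.
PARAMS = {
    "BERT-base": 110_000_000,
    "DistilBERT": 66_000_000,
}
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weights_megabytes(num_params, dtype="float32"):
    """Memory needed just to store the weights, in megabytes (10**6 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


for name, n in PARAMS.items():
    print(f"{name}: {weights_megabytes(n):.0f} MB in float32")

reduction = 1 - PARAMS["DistilBERT"] / PARAMS["BERT-base"]
print(f"parameter reduction: {reduction:.0%}")
```

Under these assumptions the weights shrink from about 440 MB to about 264 MB, a 40% reduction. Storing the weights in float16 would halve both figures again, which is one reason distillation is often combined with lower-precision formats.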

Where Are Distilled Models Used?

Distilled models are making a real-world impact across various sectors. Their ability to run efficiently without compromising much on performance has opened the door to widespread use.

Common use cases include:

- Mobile Applications: From AI-powered photo filters to language translation apps, distilled models enable fast and smart features on smartphones.

- Virtual Assistants: Assistants like Siri or Google Assistant use lightweight models for quick responses without relying heavily on server power.

- Healthcare Devices: In wearable tech and portable diagnostic tools, distilled models allow real-time analysis, even in remote or low-infrastructure settings.

- Autonomous Vehicles: Real-time object detection and decision-making require fast and efficient models that distilled architectures can support.

- Customer Service Bots: Chatbots built on distilled NLP models deliver smoother conversations and faster responses.

Tools and Libraries That Support Distillation

Several open-source tools have made knowledge distillation more accessible to developers and researchers. Some ship ready-to-use distilled models, while others provide building blocks for training student models from teacher models.

Popular frameworks include:

- Hugging Face Transformers: Provides pre-trained distilled versions of popular models such as DistilBERT and DistilRoBERTa.

- TensorFlow Model Optimization Toolkit: Offers pruning, quantization, and weight clustering tools that complement distillation for efficient deployment; the distillation step itself is usually written as a custom training loop, as in the Keras knowledge-distillation example.

- PyTorch Knowledge Distillation Libraries: Community-contributed libraries make it easier to implement distillation workflows.

Challenges and Considerations

Despite their advantages, distilled models come with their own set of limitations.

Challenges include:

- Slight Drop in Accuracy: In some cases, student models might not fully capture the depth of the teacher’s learning.

- Complex Training Process: Setting up a teacher-student framework adds complexity to the training pipeline.

- Architecture Constraints: Designing an effective student model requires careful tuning and sometimes trial and error.

These trade-offs are important to consider when deciding whether to use distilled models in a given application.

Conclusion

Distilled models represent a smart compromise between performance and efficiency in the field of AI. By capturing the essential knowledge of large models and compressing it into smaller ones, this approach enables practical deployment across devices and industries. Whether it’s powering a chatbot, enhancing smartphone features, or supporting medical diagnostics, distilled models are redefining what’s possible with machine learning on the edge. In a world where fast, accurate, and energy-efficient AI is becoming a necessity, distilled models offer a path forward that’s both intelligent and accessible.