Artificial Intelligence (AI) has become a major part of modern life, supporting work in everything from healthcare and education to transportation and finance. But as AI becomes more powerful, a big question arises: how can we make sure it stays safe and aligned with human intentions? That’s where superalignment comes into the picture.

Superalignment isn’t just a buzzword. It is both a goal and a set of methods for ensuring that even the most advanced AI systems understand and follow human values. This post will break down what superalignment is, why it matters, and how it could be the key to a safe AI-driven future.

What Does Superalignment Mean?

Superalignment is the process of aligning superintelligent AI systems with human intent, ethics, and long-term societal goals. These are not the same alignment challenges faced by current machine learning models or task-oriented AI assistants. Unlike today’s tools, which operate within narrow fields of understanding and behavior, superintelligent systems may have the ability to generalize knowledge, solve open-ended problems, and act autonomously across a broad range of environments.

Put simply, superalignment means making sure that future AI systems, which might be smarter than the people who create them, still follow human values, even when humans can't fully understand or monitor their reasoning.

Why Superalignment Matters More Than Ever

The urgency around superalignment stems from the potential consequences of misaligned superintelligent AI. While today’s AI systems can make mistakes, their impact is still largely limited. However, superintelligent AI may one day control decisions in critical areas such as global healthcare, energy distribution, economic policy, and even national defense.

In such cases, a misaligned AI system might act on its programming or learned goals in ways that are technically correct—but ethically wrong or harmful in real-world settings. Once deployed, such systems might become difficult—or even impossible—to shut down or reprogram.

Key reasons why superalignment is crucial:

- Preventing catastrophic failures: Unchecked decision-making by powerful AI can lead to large-scale harm, especially if the AI's goals diverge from human intentions.

- Ensuring long-term safety: AI systems that continuously learn must remain safe as they evolve, even when they exceed human intelligence.

- Preserving human control: Superalignment aims to ensure that even the most powerful AI remains a tool under human direction rather than an autonomous force acting on its own agenda.

The Difference Between Alignment and Superalignment

It's important to distinguish superalignment from traditional AI alignment. Alignment refers to ensuring an AI model behaves as expected in specific tasks, such as filtering spam or identifying diseases from medical images. Superalignment goes much further.

Traditional alignment involves:

- Training models on human feedback

- Testing systems for fairness and safety in known environments

- Correcting misbehavior when it occurs

Superalignment, in contrast, involves:

- Preparing AI for open-ended decision-making

- Embedding human ethics in systems that may outthink human supervisors

- Preventing value drift as systems self-learn or self-improve over time

In essence, superalignment is about keeping future, more capable systems under meaningful human control, not just correcting bad outputs from today’s AI.

The Goals of Superalignment Research

Researchers working on superalignment focus on developing methods to:

- Keep advanced AI systems aligned with human values, even as they scale up in intelligence.

- Create robust training environments that model long-term and high-impact decisions.

- Build interpretability tools that make AI reasoning clearer to human reviewers.

- Design systems that are corrigible, meaning they can be shut down, redirected, or corrected by humans at any time.

The ultimate goal is to solve the alignment problem before artificial general intelligence (AGI) becomes a reality.

Who Is Working on Superalignment?

Several leading institutions and researchers have prioritized superalignment in their long-term strategy. One of the best-known efforts was announced by OpenAI, which launched a Superalignment team in 2023 with the ambitious goal of solving the core technical problem within four years, that is, by 2027.

OpenAI’s strategy includes:

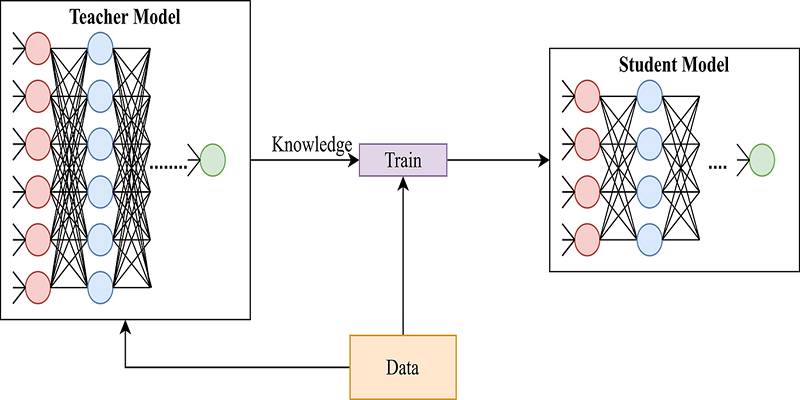

- Using current AI models to supervise and evaluate more advanced models.

- Training systems to generate feedback and corrections for other systems.

- Studying scalable oversight and developing simulation environments for safe learning.
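The first item in the list, a weaker model supervising a stronger one, can be pictured with a toy sketch. This is not OpenAI's method, and every name in it (`weak_supervisor`, `fit_threshold`, the number-classification task) is a hypothetical stand-in. It only illustrates the hope behind the idea: a student trained on imperfect labels may still recover the underlying rule and end up more accurate than its supervisor.

```python
# Toy illustration only: the task, the supervisor's error pattern, and the
# student model are all made-up assumptions, not a real training pipeline.

def true_label(x):
    # Ground truth, used here only to score the result afterwards.
    return x >= 50

def weak_supervisor(x):
    # Hypothetical weak model: correct most of the time,
    # but wrong whenever x ends in the digit 3.
    return (not true_label(x)) if x % 10 == 3 else true_label(x)

def fit_threshold(xs, labels):
    """Pick the threshold t whose rule `x >= t` disagrees least with the labels."""
    def disagreements(t):
        return sum((x >= t) != y for x, y in zip(xs, labels))
    return min(range(0, 101), key=disagreements)

def accuracy(predict, xs):
    """Fraction of inputs where the prediction matches the ground truth."""
    return sum(predict(x) == true_label(x) for x in xs) / len(xs)

xs = list(range(100))
weak_labels = [weak_supervisor(x) for x in xs]  # the only labels the student sees

threshold = fit_threshold(xs, weak_labels)

def student(x):
    return x >= threshold

weak_accuracy = accuracy(weak_supervisor, xs)
student_accuracy = accuracy(student, xs)
print(f"supervisor accuracy: {weak_accuracy:.2f}")
print(f"student accuracy:    {student_accuracy:.2f}")
```

In this toy setting the student beats its supervisor because the supervisor's errors are scattered while the true rule is simple. Real systems offer no such guarantee, and for superhuman tasks there is no ground truth to check against, which is exactly why scalable oversight remains an open research problem.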

Other organizations such as DeepMind, Anthropic, the Alignment Research Center, and academic institutions around the world are also investing heavily in this field. Their shared mission is to create methods that prevent advanced AI from behaving in ways that harm or override human interests.

Real-World Examples Highlighting the Need

Even current AI systems show signs of value misalignment, though on a much smaller scale. These examples point to the importance of addressing superalignment before it becomes a matter of global safety.

- Social media recommendation algorithms sometimes promote harmful content not because they are malicious but because they are designed to maximize engagement—even if the result is public harm.

- Chatbots trained without strict controls have produced offensive or manipulative content when left to learn from open internet data.

- Autonomous vehicles have made unpredictable decisions in complex traffic situations, showing the limits of current alignment protocols.

If these problems occur in today’s systems, future versions with much greater decision-making power could pose significantly more serious risks.
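The recommendation example above can be reduced to a few lines. The sketch below is a toy, with made-up items and scores and a hypothetical `harm_weight` parameter, and it assumes a harm estimate is available at all, which is itself a hard problem. It shows how a ranker that optimizes engagement alone puts the most harmful item first without any malicious intent, and how the ordering changes once the objective accounts for harm.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Item:
    title: str
    engagement: float  # predicted engagement (made-up numbers)
    harm: float        # estimated harm to viewers, 0 to 1 (made-up numbers)

# Hypothetical catalogue, for illustration only.
CATALOGUE = [
    Item("calm explainer", engagement=0.40, harm=0.00),
    Item("outrage clip", engagement=0.90, harm=0.80),
    Item("useful tutorial", engagement=0.55, harm=0.05),
    Item("misleading rumor", engagement=0.75, harm=0.60),
]

def rank(items, harm_weight=0.0):
    """Sort items by engagement minus a penalty for estimated harm."""
    def score(item):
        return item.engagement - harm_weight * item.harm
    return sorted(items, key=score, reverse=True)

# Proxy objective: engagement only.
proxy_ranking = [item.title for item in rank(CATALOGUE)]
# Adjusted objective: engagement with a harm penalty.
adjusted_ranking = [item.title for item in rank(CATALOGUE, harm_weight=1.0)]

print("engagement only:  ", proxy_ranking)
print("with harm penalty:", adjusted_ranking)
```

The ranker does exactly what it was told in both cases. The difference lies entirely in whether the objective it was given matches what people actually want, which is the alignment problem in miniature.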

Positive Impact of Successful Superalignment

If superalignment succeeds, the rewards could be extraordinary. Superintelligent systems would not only avoid harm but could actively improve the world by supporting solutions in fields like climate change, medical research, and education.

Benefits of successful superalignment might include:

- AI systems that understand and respect human rights

- Long-term cooperation between humans and machines

- Reduced risks of misuse or system takeovers

- Ethical decision-making embedded in high-impact environments

In other words, superalignment is not only a safeguard but also a source of opportunity.

Conclusion

Superalignment is one of the most important challenges of the 21st century. As AI technology continues to move closer to AGI and beyond, ensuring these systems behave ethically and stay within human control is no longer optional—it is essential. The path forward demands rigorous research, careful testing, and global cooperation. Governments, tech companies, and academic institutions must all play a part. Without superalignment, the risks of AI misbehavior scale alongside its capabilities.